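1. Introduction to Sentiment Analysis

- Sentiment analysis computes a positive or negative sentiment score for a text, based on the subjective words and context the text contains.

- There are two approaches: supervised and unsupervised learning.

- The supervised approach is nearly identical to ordinary text-based binary classification.

- The unsupervised approach uses a sentiment lexicon (a dictionary of sentiment-bearing words) to judge whether a text is positive or negative.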
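To make the lexicon idea concrete, here is a minimal sketch of lexicon-based scoring. The `TOY_LEXICON` dictionary, its scores, and the `lexicon_sentiment` helper are all hypothetical and only for illustration; real sentiment lexicons are much larger and handle negation, intensity, and context.

```python
import re

# Hypothetical toy lexicon: word -> polarity score (not a real resource).
TOY_LEXICON = {
    "good": 1.0, "great": 2.0, "nice": 1.0,
    "bad": -1.0, "boring": -1.5, "hate": -2.0,
}

def lexicon_sentiment(text, lexicon=TOY_LEXICON):
    """Sum the polarity of known words; return (label, score).

    label is 1 (positive) when the summed score is above zero, else 0.
    """
    tokens = re.findall(r"[a-z']+", text.lower())
    score = sum(lexicon.get(tok, 0.0) for tok in tokens)
    return (1 if score > 0 else 0), score

label, score = lexicon_sentiment("a great and nice film")
print(label, score)
```

No labels are needed to produce a prediction here, which is what makes the approach unsupervised; the quality depends entirely on the lexicon.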

2. Supervised Sentiment Analysis in Practice: IMDB Movie Reviews

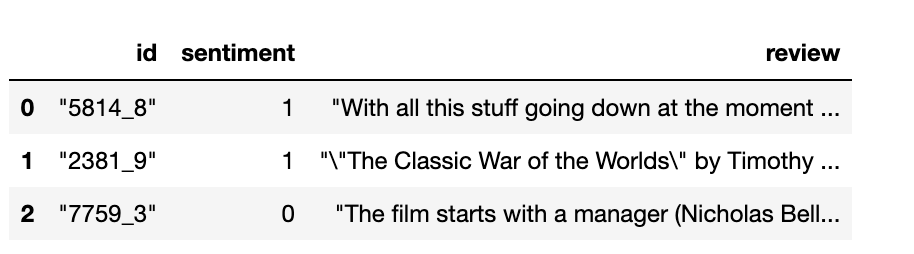

- Kaggle's labeledTrainData.tsv is a tab (\t) separated file; load it into a DataFrame.

- Columns of the resulting DataFrame:

- id : unique id of each record

- sentiment : sentiment label of the review, 1 (positive) or 0 (negative)

- review : text of the movie review

import pandas as pd

review_df = pd.read_csv('labeledTrainData.tsv', header=0, sep="\t", quoting=3)

review_df.head(3)
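The `quoting=3` argument above corresponds to `csv.QUOTE_NONE` in Python's standard `csv` module, meaning double quotes are treated as ordinary characters rather than field delimiters. The sketch below shows the effect with the standard library only; the `sample` string is a made-up row shaped like the file, not actual data.

```python
import csv
import io

# Hypothetical sample shaped like labeledTrainData.tsv (not real data).
sample = 'id\tsentiment\treview\n"5814_8"\t1\t"He said "fine" and left."\n'

# quoting=3 in read_csv is the numeric value of csv.QUOTE_NONE.
print(csv.QUOTE_NONE)

# With QUOTE_NONE the quote characters stay inside the field values,
# so quotes that appear in the middle of a review cannot break parsing.
rows = list(csv.reader(io.StringIO(sample), delimiter="\t",
                       quoting=csv.QUOTE_NONE))
print(rows[1])
```

This is also why the printed review further down still starts and ends with a literal double quote.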

- Check how the review text is structured

- It contains <br /> tags, digits, and special characters

print(review_df['review'][0])

[output]

"With all this stuff going down at the moment with MJ i've started listening to his

music, watching the odd documentary here and there, watched The Wiz and watched Moonwalker again. Maybe i just want to get a certain insight

into this guy who i thought was really cool in the eighties just to maybe make up my mind whether he is guilty or innocent. Moonwalker is part biography,

part feature film which i remember going to see at the cinema when it was originally released. Some of it has subtle messages about MJ's feeling towards the

press and also the obvious message of drugs are bad m'kay.<br /><br />Visually impressive but of course this is all about Michael Jackson so unless you remotely

like MJ in anyway then you are going to hate this and find it boring. Some may call MJ an egotist for consenting to the making of this movie BUT MJ and most of

his fans would say that he made it for the fans which if true is really nice of him.<br /><br />The actual feature film bit when it finally starts is only on for 20 minutes

or so excluding the Smooth Criminal sequence and Joe Pesci is convincing as a psychopathic all powerful drug lord. Why he wants MJ dead so bad is beyond me. Because MJ overheard his plans?

Nah, Joe Pesci's character ranted that he wanted people to know it is he who is supplying drugs etc so i dunno, maybe he just hates MJ's music.<br /><br />Lots of cool things in this like MJ turning into a car

and a robot and the whole Speed Demon sequence.

Also, the director must have had the patience of a saint when it came to filming the kiddy Bad sequence as usually directors hate working with one kid let alone a whole bunch of them performing a complex dance scene.

<br /><br />Bottom line, this movie is for people who like MJ on one level or another (which i think is most people). If not, then stay away. It does try and give off a wholesome message and ironically MJ's bestest buddy in this movie is a girl!

Michael Jackson is truly one of the most talented people ever to grace this planet but is he guilty? Well, with all the attention i've gave this subject....hmmm well i don't know because people can be different behind closed doors, i know this for a fact.

He is either an extremely nice but stupid guy or one of the most sickest liars. I hope he is not the latter."

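- Remove the <br /> tags, then find digits and special characters with a regular expression and replace them with spaces

- Python's re module provides convenient regular-expression support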

import re

# Replace the <br /> HTML tags with a space using str.replace

review_df['review'] = review_df['review'].str.replace('<br />', ' ')

# Use Python's re module to replace every character that is not an English letter with a space

review_df['review'] = review_df['review'].apply(lambda x : re.sub('[^a-zA-Z]', ' ', x))
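The two cleaning steps can be checked on a single string without pandas. The `clean_review` helper and the `sample` text below are illustrative names, not part of the original notebook.

```python
import re

def clean_review(text):
    """Apply the same two steps as above to one string."""
    # Step 1: literal replacement of the HTML line-break tag.
    text = text.replace("<br />", " ")
    # Step 2: anything outside a-z / A-Z becomes a space.
    return re.sub("[^a-zA-Z]", " ", text)

sample = "only on for 20 minutes.<br /><br />MJ's music"
cleaned = clean_review(sample)
print(cleaned.split())
```

Note that the apostrophe is removed too, so "MJ's" is split into "MJ" and "s"; digits such as "20" disappear entirely. Runs of spaces are left in place, which is harmless because the vectorizers tokenize on word boundaries.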

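- Extract the sentiment column as the label set, build the feature set from the remaining review column, and split both into training and test sets

- With test_size=0.3, the 25,000 reviews are split into 17,500 training reviews and 7,500 test reviews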

from sklearn.model_selection import train_test_split

class_df = review_df['sentiment']

feature_df = review_df.drop(['id', 'sentiment'], axis=1, inplace=False)

X_train,X_test,y_train,y_test = train_test_split(feature_df, class_df, test_size=0.3, random_state=156)

X_train.shape, X_test.shape

[output]

((17500, 1), (7500, 1))

- Use a Pipeline object to chain feature vectorization with training, prediction, and evaluation of an ML classifier
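The vectorizers in this section are configured with English stop-word filtering and `ngram_range=(1,2)`. As a rough illustration of which features that produces, here is a pure-Python sketch. The `word_ngrams` function and the tiny `STOP` set are hypothetical, and the sketch assumes stop words are dropped before n-grams are formed, which is my understanding of scikit-learn's behavior.

```python
def word_ngrams(tokens, stop_words, ngram_range=(1, 2)):
    """Return unigrams up to n-grams built from the non-stop-word tokens."""
    kept = [t for t in tokens if t not in stop_words]
    low, high = ngram_range
    grams = []
    for n in range(low, high + 1):
        # Slide a window of length n over the remaining tokens.
        for i in range(len(kept) - n + 1):
            grams.append(" ".join(kept[i:i + n]))
    return grams

STOP = {"this", "is", "a", "the"}  # hypothetical tiny stop list
features = word_ngrams("this movie is really boring".split(), STOP)
print(features)
```

Bigrams such as "really boring" let a linear model pick up short phrases, at the cost of a much larger vocabulary.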

# Feature vectorization and ML training/prediction/evaluation with a Pipeline object

from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer

from sklearn.pipeline import Pipeline

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import accuracy_score, roc_auc_score

# Run CountVectorizer with English stop-word filtering and ngram_range=(1,2)

# Set C=10 for LogisticRegression

pipeline = Pipeline([

('cnt_vect', CountVectorizer(stop_words='english', ngram_range=(1,2))),

('lr_clf', LogisticRegression(solver='liblinear', C=10))])

# Train and predict with the Pipeline's fit() and predict(); predict_proba() is called because ROC-AUC needs class probabilities

pipeline.fit(X_train['review'], y_train)

pred = pipeline.predict(X_test['review'])

pred_probs = pipeline.predict_proba(X_test['review'])[:,1]

print('Accuracy: {0:.4f}, ROC-AUC: {1:.4f}'.format(accuracy_score(y_test, pred),

roc_auc_score(y_test, pred_probs)))

[output]

Accuracy: 0.8861, ROC-AUC: 0.9503
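ROC-AUC is computed from the predicted probability of the positive class rather than from hard 0/1 predictions, because it measures how well positives are ranked above negatives. The sketch below uses the pairwise definition (the fraction of positive/negative pairs ranked correctly, ties counting half). The `roc_auc_pairwise` function and the toy arrays are illustrative only; this O(n²) version is not how a library would implement it.

```python
def roc_auc_pairwise(y_true, scores):
    """AUC as the share of (positive, negative) pairs ranked correctly."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(pos) * len(neg))

y = [1, 1, 0, 0]
probs = [0.9, 0.4, 0.6, 0.1]   # made-up probabilities
hard = [1, 0, 1, 0]            # the same predictions thresholded at 0.5

print(roc_auc_pairwise(y, probs), roc_auc_pairwise(y, hard))
```

Thresholding throws away the ordering inside each predicted class, so the score computed from hard labels is generally less informative than the one computed from probabilities.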

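- With TF-IDF feature vectorization, prediction performance improves slightly (accuracy from 0.8861 to 0.8936, ROC-AUC from 0.9503 to 0.9598)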
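For intuition about how TF-IDF differs from raw counts, the following sketch computes weights for one document using the smoothed IDF formula ln((1 + n) / (1 + df)) + 1 followed by L2 normalization, which I believe matches scikit-learn's default settings. The helper names and the toy document frequencies are assumptions made for this example.

```python
import math

def smoothed_idf(n_docs, doc_freq):
    """Smoothed inverse document frequency: ln((1 + n) / (1 + df)) + 1."""
    return math.log((1 + n_docs) / (1 + doc_freq)) + 1.0

def tfidf_row(term_counts, doc_freqs, n_docs):
    """TF-IDF weights for one document, scaled to unit L2 norm."""
    weights = {t: c * smoothed_idf(n_docs, doc_freqs[t])
               for t, c in term_counts.items()}
    norm = math.sqrt(sum(w * w for w in weights.values()))
    return {t: w / norm for t, w in weights.items()}

# Toy corpus statistics: "movie" appears in all 4 documents, "boring" in 1.
doc_freqs = {"movie": 4, "boring": 1}
row = tfidf_row({"movie": 1, "boring": 1}, doc_freqs, n_docs=4)
print(row)
```

Both words occur once in the document, yet the rarer word "boring" receives the larger weight, which is the down-weighting of ubiquitous terms that raw counts lack.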

# Run TF-IDF vectorization with English stop-word filtering and ngram_range=(1,2)

# Set C=10 for LogisticRegression

pipeline = Pipeline([

('tfidf_vect', TfidfVectorizer(stop_words='english', ngram_range=(1,2))),

('lr_clf', LogisticRegression(solver='liblinear', C=10))])

pipeline.fit(X_train['review'], y_train)

pred = pipeline.predict(X_test['review'])

pred_probs = pipeline.predict_proba(X_test['review'])[:,1]

print('Accuracy: {0:.4f}, ROC-AUC: {1:.4f}'.format(accuracy_score(y_test, pred),

roc_auc_score(y_test, pred_probs)))

[output]

Accuracy: 0.8936, ROC-AUC: 0.9598

Ref) 파이썬 머신러닝 완벽가이드 (Python Machine Learning Complete Guide)


1. 감성분석 소개

- 감성분석은 텍스트가 나타내는 주관적인 단어와 문맥을 기반으로 긍정, 부정 감성(sentiment) 수치를 계산하는 방법을 이용

- 지도학습과 비지도학습 방식이 있음

- 지도학습은 텍스트 기반의 이진분류와 거의 동일

- 비지도학습은 'Lexicon' 감성어휘사전을 이용하여 긍정적, 부정적 감성여부 판단

2. 지도학습 기반 감성분석 실습 - IMDB 영화평

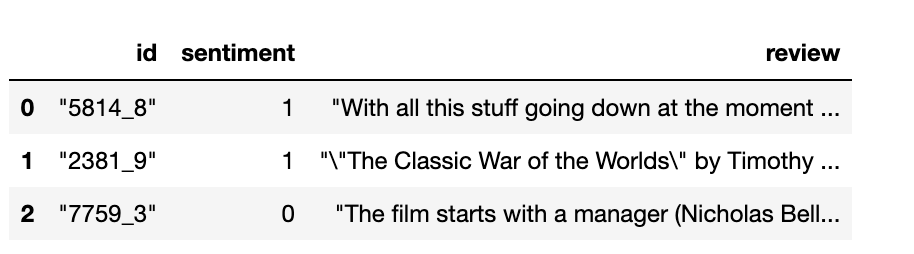

- 캐글의 labeledTrainData.tsv는 탭(\t) 문자로 분리되어 있는 파일, DataFrame 으로 변환

- 생성된 데이터 프레임의 칼럼

- id : 각 데이터의 id

- sentiment : Review 의 sentiment 결과 값 1(긍정적), 0(부정적)

- review : 영화평의 텍스트

import pandas as pd

review_df = pd.read_csv('labeledTrainData.tsv', header=0, sep="\t", quoting=3)

review_df.head(3)

- 텍스트 구성 확인

- <br \>태그 , 숫자, 특수문자 존재

print(review_df['review'][0])

[output]

"With all this stuff going down at the moment with MJ i've started listening to his

music, watching the odd documentary here and there, watched The Wiz and watched Moonwalker again. Maybe i just want to get a certain insight

into this guy who i thought was really cool in the eighties just to maybe make up my mind whether he is guilty or innocent. Moonwalker is part biography,

part feature film which i remember going to see at the cinema when it was originally released. Some of it has subtle messages about MJ's feeling towards the

press and also the obvious message of drugs are bad m'kay.<br /><br />Visually impressive but of course this is all about Michael Jackson so unless you remotely

like MJ in anyway then you are going to hate this and find it boring. Some may call MJ an egotist for consenting to the making of this movie BUT MJ and most of

his fans would say that he made it for the fans which if true is really nice of him.<br /><br />The actual feature film bit when it finally starts is only on for 20 minutes

or so excluding the Smooth Criminal sequence and Joe Pesci is convincing as a psychopathic all powerful drug lord. Why he wants MJ dead so bad is beyond me. Because MJ overheard his plans?

Nah, Joe Pesci's character ranted that he wanted people to know it is he who is supplying drugs etc so i dunno, maybe he just hates MJ's music.<br /><br />Lots of cool things in this like MJ turning into a car

and a robot and the whole Speed Demon sequence.

Also, the director must have had the patience of a saint when it came to filming the kiddy Bad sequence as usually directors hate working with one kid let alone a whole bunch of them performing a complex dance scene.

<br /><br />Bottom line, this movie is for people who like MJ on one level or another (which i think is most people). If not, then stay away. It does try and give off a wholesome message and ironically MJ's bestest buddy in this movie is a girl!

Michael Jackson is truly one of the most talented people ever to grace this planet but is he guilty? Well, with all the attention i've gave this subject....hmmm well i don't know because people can be different behind closed doors, i know this for a fact.

He is either an extremely nice but stupid guy or one of the most sickest liars. I hope he is not the latter."

- <br \>태그 삭제, 숫자/특수문자는 정규표현식으로 찾고 공란으로 변경

- 파이썬의 re 모듈은 편리하게 정규표현식 지원

import re

#<br> html 태그는 replace 함수로 공백으로 변환

review_df['review'] = review_df['review'].str.replace('<br />', ' ')

#파이썬의 정규표현식 모듈인 re를 이용해 영어 문자열이 아닌 문자는 모두 공백으로 변환

review_df['review'] = review_df['review'].apply(lambda x : re.sub('[^a-zA-Z]', ' ', x))

- sentiment 칼럼을 별도로 추출하여 레이블 데이터 세트 추출, 피처 데이터 세트 생성, 학습/훈련 데이터 세트 분리

- 학습용 데이터 17500개 리뷰, 테스트용 데이터 7500개 리뷰로 구성

from sklearn.model_selection import train_test_split

class_df = review_df['sentiment']

feature_df = review_df.drop(['id', 'sentiment'], axis=1, inplace=False)

X_train,X_test,y_train,y_test = train_test_split(feature_df, class_df, test_size=0.3, random_state=156)

X_train.shape, X_test.shape

[output]

((17500, 1), (7500, 1))

- Pipeline 객체를 이용하여 피처벡터화, ML 분류 알고리즘 학습/예측/평가

# pipeline 객체를 이용한 피처 벡터화, ML 학습/예측/평가

from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer

from sklearn.pipeline import Pipeline

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import accuracy_score, roc_auc_score

#스톱 워드는 English, filtering, ngram은 (1,2)로 설정해 CountVectorizer 수행

#LogisticRegression 의 C는 10으로 설정

pipeline = Pipeline([

('cnt_vect', CountVectorizer(stop_words='english', ngram_range=(1,2))),

('lr_clf', LogisticRegression(solver='liblinear', C=10))])

#Pipeline 객체를 이용해 fit(),predict()로 학습/예측 수행, predict_prob()는 roc_auc 때문에 수행

pipeline.fit(X_train['review'], y_train)

pred = pipeline.predict(X_test['review'])

pred_probs = pipeline.predict_proba(X_test['review'])[:,1]

print('예측 정확도는 {0:.4f}, ROC-AUC는 {1:.4f}'.format(accuracy_score(y_test, pred),

roc_auc_score(y_test, pred_probs)))

[output]

예측 정확도는 0.8861, ROC-AUC는 0.9503

- TF-IDF 기반 피처 벡터화의 예측 성능이 조금 더 나아짐

#스톱 워드는 English, filtering, ngram은 (1,2)로 설정해 TF-IDF 벡터화 수행

#LogisticRegression 의 C는 10으로 설정

pipeline = Pipeline([

('tfidf_vect', TfidfVectorizer(stop_words='english', ngram_range=(1,2))),

('lr_clf', LogisticRegression(solver='liblinear', C=10))])

pipeline.fit(X_train['review'], y_train)

pred = pipeline.predict(X_test['review'])

pred_probs = pipeline.predict_proba(X_test['review'])[:,1]

print('예측 정확도는 {0:.4f}, ROC-AUC는 {1:.4f}'.format(accuracy_score(y_test, pred),

roc_auc_score(y_test, pred_probs)))

[output]

예측 정확도는 0.8936, ROC-AUC는 0.9598Ref) 파이썬 머신러닝 완벽가이드

'데이터 > 머신러닝' 카테고리의 다른 글

| [NLP] Chapter 8 | 텍스트 분석 (네이버 영화 평점 감성 분석) (0) | 2023.02.11 |

|---|---|

| [NLP] Chapter 8 | 텍스트 분석 (비지도학습 기반 감성 분석) (0) | 2023.02.05 |

| [NLP] Chapter 8 | 텍스트 분석 (텍스트 분류) (1) | 2023.02.03 |

| [NLP] Chapter 8 | 텍스트 분석 (소개 및 기반지식) (1) | 2023.02.02 |

| [RecSys] Chapter 9 | 추천시스템 (0) | 2023.02.01 |